First experiment of Chequeado’s AI Laboratory: simplifying complex concepts with Artificial Intelligence

Have you ever wondered how AI models can help communicate complex ideas more clearly and accessibly? In Chequeado’s AI Laboratory, we evaluated the performance of GPT-4, Claude Opus, Llama 3, and Gemini 1.5 in simplifying excerpts from articles on economics, statistics, and elections, comparing their results with versions generated by humans. For this, we conducted a manual technical evaluation and also a survey of potential readers to understand their preferences.

One of the main learnings from this work, which was driven by the ENGAGE fund granted by IFCN, was the importance of format when conveying complex concepts. The models that structured the information in a more accessible way for the user experience obtained better results in the survey of potential readers. Claude Opus’ answers were the most liked by users. Behind were Llama 3, Gemini 1.5, and the responses written by a human. The three most chosen models used bullet points or question-answer format when rewriting the texts.

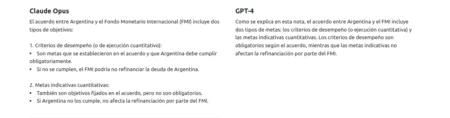

Although GPT-4 scored better than the other models in the technical evaluation, it was relegated to the last place in user ratings, although this result could be related to the fact that it respected the original format of the paragraphs when rewriting the texts. In the technical evaluation, GPT-4 stood out for its ability to respect the style and format of the original text, without adding extra information or generating false content. Claude Opus occasionally added summaries at the end of the original texts that had not been requested. On the other hand, Llama and Gemini 1.5 showed difficulties in maintaining the original style and sources, and on several occasions introduced new information that was not present in the original text.

Manual evaluation results

Our first task was to analyze the technical performance of each model according to various metrics:

- Task compliance: Does the model simplify the text without losing relevant information?

- Does not add new information: Does it avoid including data or opinions not present in the original?

- Respects style: Does it maintain the tone and style of the original text?

- Respects format: Does it preserve the structure of paragraphs and sections from the original?

- Maintains sources: Does it preserve citations and references to external sources?

We evaluated the average performance of each model using a traffic light system (green/yellow/red). The evaluation indicated that all models respected the task.

GPT-4 obtained the best results in this evaluation, as it respected format, style, and did not add new information, although on some occasions it lost citations or reference sources present in the original text. Claude, despite not adding false information, included unsolicited final summaries. On the other hand, it was the one that best preserved the original citations and sources, although it altered the format several times to add lists and subtitles and divide into sections. Llama refused to answer questions about elections in some tests. All models except GPT-4 generated new formats with titles, questions, shorter sections, and lists to facilitate understanding, even in cases where the task included the phrase “Respecting the original format.”

User preferences

After completing the manual evaluation of technical performance, we conducted a survey where 15 users participated in 5 rounds where they had to choose between two versions of simplified texts (or declare a tie). Each text was generated by one of the models or by a journalist.

The results revealed a preference among respondents for modified formats with bulleted lists and question-and-answer sections. This suggests that format is as important as content in making complex concepts accessible. This may explain why GPT-4, which had been the best model in terms of the manual evaluation criteria we defined, was the least chosen by users.

If we evaluate the results by the format of the response, we have on the one hand the triad Claude, Gemini, and Llama, where Claude takes a wide lead against the other two models, although all three use similar formats, and on the other hand, we have the human version and that of GPT-4, which respect the original format of the text. The human version was chosen 54% of the time against GPT-4’s 32%, which came last.

Time saving

On average, a person takes about 3 minutes to simplify a 50-word paragraph. Therefore, transforming a 500-word article would take around 30 minutes of human work. Although using the models allows us to obtain a clearer and better-formatted version quickly, it is also important to consider the time needed for the text generated by AI to be reviewed and validated by a person before publication, a time commitment that could vary depending on the response obtained and the complexity requiring human supervision.

Some key learnings

- Format is the key: we learned that models that modified the original format (adding titles, lists, etc.) generated clearer and more attractive texts for readers. Although this makes it difficult to compare results, it is a very important learning for our writing work: if we want to better communicate complex concepts, format is as important as content.

- Conducting a curation process of prompts (instructions given to the models) prior to evaluation means significant time savings and is worth taking a good amount of time to curate and adjust the instructions as much as possible for the tests. It is important to use a reduced number of prompts for evaluation, as the number of tests increases significantly with each prompt added.

- The perspective of potential readers or users provides a lot of information and clarity to the process and allows us to better understand what works and why in a real environment of application of these strategies.

Methodology

To carry out this experiment, we followed the following steps:

- We selected 6 excerpts from articles with complex concepts to use as test input.

- We developed 3 promptsto guide the models. This process involved evaluating different prompting strategies to achieve the best possible results. If you are interested in learning more about prompting, we recommend this guide.The prompt that generated the best results was:

“Context: Imagine you are a data journalist specialized in UX writing and fact-checking.

Task: Respecting the original format, rewrite the following text in a way that is more readable, accessible, and clear, without losing any of the original information. The text should be understandable by a high school student.

Text:” [input text to simplify]

- After crossing each prompt (point 2) with each text excerpt (from point 1) with the 4 models chosen for this evaluation, we generated 72 responses of simplified texts for comparison.

- Manually, we evaluated the compliance with the task, consistency, style, and format for each of the responses we generated and built a performance scale in each category for each of the models.

- To add the subjective preference perspective of people and their opinion regarding which of the simplified versions were clearer, we conducted a survey to understand which models better met the task according to potential readers’ consideration.

Conclusion

We developed this small experiment with the idea of learning how AI models could help us simplify complex concepts, but also to understand, through practice, how we can build strategies to evaluate these models in new tasks.

In the manual evaluation, GPT-4 stood out for its ability to meet the tasks and respect the original format and style and not generate extra information or hallucinate, while other models had problems and tended to include additional elements or change the style of the content. However, user preferences revealed the importance of format and visual presentation in perceived clarity. Texts with bullet points, question-and-answer sections, and other visual elements were consistently more chosen, even when they were generated by models that did not strictly respect the original task.

This taught us that when simplifying complex concepts, we must pay as much attention to format as to the content itself. In summary, although human review remains essential, the ability of models to generate simplified and well-formatted versions can significantly reduce the time spent on this task. The challenge now is to continue exploring and refining these tools in new tasks and challenges that allow us to continue introducing technology into the fact-checking field to improve our work.

Disclaimer: This text was structured and corrected with the assistance of Claude Opus.

Comentarios

Valoramos mucho la opinión de nuestra comunidad de lectores y siempre estamos a favor del debate y del intercambio. Por eso es importante para nosotros generar un espacio de respeto y cuidado, por lo que por favor tené en cuenta que no publicaremos comentarios con insultos, agresiones o mensajes de odio, desinformaciones que pudieran resultar peligrosas para otros, información personal, o promoción o venta de productos.

Muchas gracias