Artificial unintelligence: a new door to disinformation?

Within the fight against disinformation, the testing of new artificial intelligence tools such as ChatGPT is causing a great deal of concern among fact-checkers. Beyond spreading false information, these tools can generate, in a matter of seconds, texts containing disinformation that can deceive thousands of people, which is why they are making headlines.

This concern arises even though artificial intelligence has allowed us to fact-check faster and better for several years now (in our case, with Chequeabot since 2016). It is not a matter of rejecting technology per se, since it is often our ally, but a concern grounded in our understanding of what these kinds of systems can do and in the low priority that the problem of disinformation seems to have on developers’ agendas.

ChatGPT is a free tool (registration is required) that allows you to chat with an artificial intelligence created by OpenAI, a company whose founders include Elon Musk and which counts Microsoft among its key investors.

Users ask questions or make requests, and the system answers promptly. How does it differ from the Google search engine, for example? Instead of providing a link to a website, ChatGPT “chats”: it gives you an answer of varying length, without links, written in a way that resembles a human. Besides answering questions, it can also write essays, poems, or exam answers.

However, there’s a problem. Sometimes its answers are relevant and based on the best available evidence, but sometimes they are not. In other words, this AI tool can also respond with disinformation (what some people call fake news) and even create it.

Thus, ChatGPT switches on a warning light on the dashboard, one that is yellow for now. Whether it turns red or green will depend largely on OpenAI’s next steps and on feedback from the global community working to improve the quality of the information that circulates.

After testing ChatGPT, two clear points stand out regarding its potential for disinformation: on the one hand, the quality of the results it provides, which will be integrated into other tools; on the other, the risk that ChatGPT becomes a reservoir of disinformation.

Regarding the first point (quality of results), although its speed of response on almost any topic is surprising, many of its answers contain errors about relatively simple information. If it makes mistakes on easily checkable facts, what happens in cases where, because it does not cite sources, you can only take its word for it?

For example, when asked about Messi, it may share many accurate facts but also randomly claim that he played for a Saudi Arabian club. It may learn a few days later that this was incorrect and stop including it in its answers, but by then it is too late for whoever asked earlier. When asked about fact-checkers in the region, ChatGPT mentions Chequeado, but also a company from another continent that does not do fact-checking.

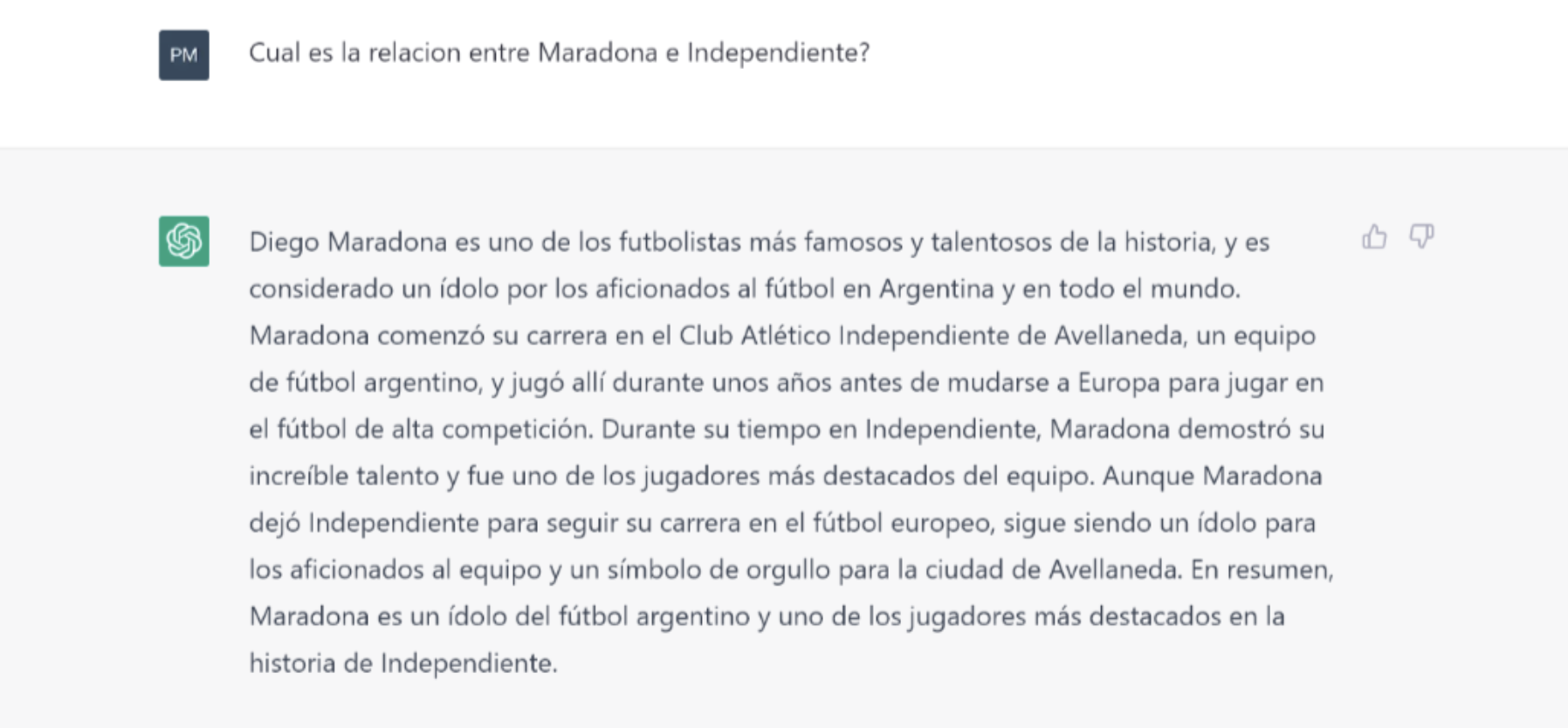

Or, when asked about the relationship between Maradona and Independiente (an Argentine football team), it may answer that he started his career there, describe him as one of Independiente’s most outstanding players, or even state that he played there in the years before his transfer to European football. In fact, before moving to Europe, Maradona played in Argentina for Argentinos Juniors and Boca Juniors.

> What’s the relationship between Maradona and Independiente?
>
> Diego Maradona is one of the most famous and talented football players and an idol for football fans in Argentina and around the world. Maradona started his career in the Atlético Independiente de Avellaneda Club, an Argentine football team, and played there for a couple of years before moving to Europe to play in a top-level league. While in Independiente, Maradona proved his incredible talent and was one of the most outstanding players on the team. Even though Maradona left Independiente to advance his career in Europe, he is still an idol for the club’s fans and his participation on the team is something that the City of Avellaneda feels proud of. In brief, Maradona is an icon of Argentine football and one of the most outstanding players in Independiente’s history.

This becomes even more complicated because of the lack of attribution and sources, something rooted in the DNA of these artificial intelligences, which learn by feeding on many different sources that they weigh but do not always disclose. Whom should we cite if we used an answer provided by the AI in our own work, as thousands of people already do? Perplexity.ai, another platform based on OpenAI technology, shows that citing sources, although difficult, is possible.

Given how these systems work, learning from their interactions with millions of users, the quality of their answers may well improve and, if OpenAI agrees, information on where each fact or statement comes from could be disclosed, with all the rights-related challenges this may pose.

Regarding the second point (ChatGPT as a reservoir of disinformation), things are more complex, since the platform itself warns users that it may generate incorrect information.

For this article, we asked it to write a story containing disinformation. Although it resisted at first (which at least shows that OpenAI is aware of the issue), after several attempts it gave in to our request, and the results were fairly convincing.

An extreme example can be found in two investigations, one by NewsGuard (republished by the Chicago Tribune) and one by ElDiario.es, in which the researchers freed ChatGPT from its imposed restrictions and got it to write whatever they asked, however violent or misleading. Of course, most regular users won’t do this, but those who create disinformation have the people, money, and time to bend these systems to their benefit.

Ultimately, why does this tool raise concern in terms of disinformation? Although ChatGPT is free for now, it is not yet widely known. Microsoft plans to integrate it into the Bing search engine soon, which would make it far more accessible and widespread. If the safeguards aimed at preventing harmful use of the tool do not work properly, it will become easier to create websites with misleading information, one of the ways disinformation creators attract audiences to their content.

Another reason to keep an eye on ChatGPT’s development is that, because it draws on a wide range of sources and the Internet over-represents developed and English-speaking countries, results in Spanish or Portuguese, to name just two of the main languages in our region, are likely to undergo even fewer checks than results in OpenAI’s native language, English.

This is one of the reasons why regional and global fact-checking organizations are continually monitoring and investigating these tools. For our part, we will keep checking ChatGPT to help ensure that its results do not misinform and that the particularities of our region and languages are taken into account.

In essence, we will check it just as we check human intelligence. We hope that OpenAI and the rest of the AI ecosystem recognize that disinformation is a crucial problem and act accordingly.

—

This article was originally published in the print edition of the newspaper La Nación (Argentina).
